Abstract

Vision-Language-Action (VLA) models have recently emerged in robotics as a transformative approach, enabling robots to execute complex tasks by integrating visual and linguistic inputs within an end-to-end learning framework. While VLA models offer significant capabilities, they also introduce new attack surfaces that make them vulnerable to adversarial attacks. Because these vulnerabilities remain largely unexplored, this paper systematically quantifies the robustness of VLA-based robotic systems. Recognizing the unique demands of robotic execution, our attack objectives target the inherent spatial and functional characteristics of robotic systems. In particular, we introduce two untargeted attack objectives that leverage spatial foundations to destabilize robotic actions, and a targeted attack objective that manipulates the robotic trajectory. Additionally, we design an adversarial patch generation approach that places a small, colorful patch within the camera's view, effectively executing the attack in both digital and physical environments. Our evaluation reveals a marked degradation in task success rates, with up to a 100% reduction across a suite of simulated robotic tasks, highlighting critical security gaps in current VLA architectures. By unveiling these vulnerabilities and proposing actionable evaluation metrics, we advance both the understanding and the enhancement of safety for VLA-based robotic systems, underscoring the need to continuously develop robust defense strategies prior to physical-world deployment.

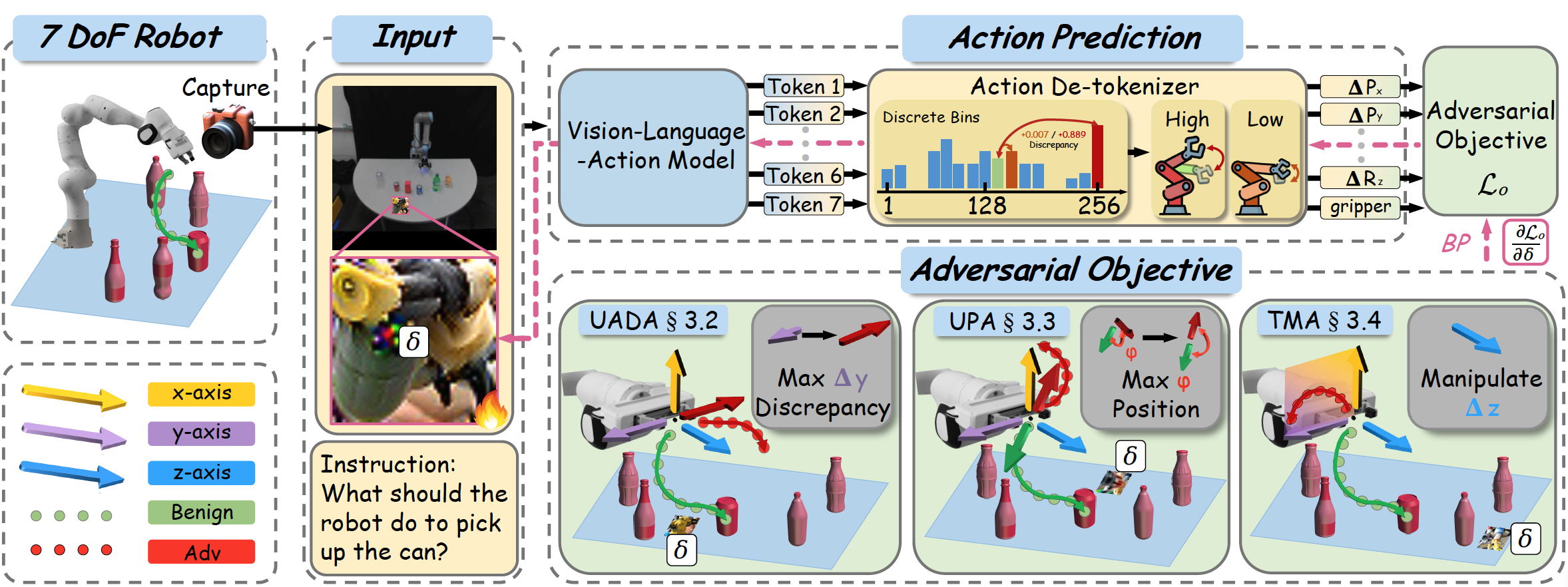

The robot captures an input image and processes it through a vision-language model to generate tokens representing actions; an action de-tokenizer then performs discrete bin prediction. The adversarial perturbation δ is optimized against the model with objectives that focus on various discrepancies and geometries (i.e., UADA, UPA, TMA). Forward propagation is shown in black and backpropagation in pink. The objectives aim to maximize action errors and thereby degrade task performance, with visual emphasis on manipulation in 3D space during task execution, such as picking up a can.

Figure 1: Overall Adversarial Framework.
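To make the pipeline in Figure 1 concrete, the following is a minimal sketch of two of its ingredients: mapping discrete bin indices back to continuous actions, and a signed-gradient update of a patch that increases the discrepancy between the perturbed and clean actions. It is an illustration under stated assumptions, not the method evaluated in this paper. The bin count (`N_BINS = 256`), the normalized action range, the linear stand-in `toy_policy`, and all function names are hypothetical; a real VLA model would replace the linear map, and its gradients would come from backpropagation rather than a closed form.

```python
import numpy as np

N_BINS = 256                          # assumed number of discrete bins per action dimension
ACTION_LOW, ACTION_HIGH = -1.0, 1.0   # assumed normalized action range


def detokenize(bin_ids):
    """Map discrete bin indices to continuous actions using bin centers."""
    edges = np.linspace(ACTION_LOW, ACTION_HIGH, N_BINS + 1)
    centers = (edges[:-1] + edges[1:]) / 2.0
    return centers[np.asarray(bin_ids)]


def apply_patch(image, patch, top, left):
    """Return a copy of `image` with `patch` pasted at (top, left)."""
    out = image.copy()
    h, w = patch.shape[:2]
    out[top:top + h, left:left + w] = patch
    return out


def toy_policy(image, W):
    """Hypothetical linear stand-in for a VLA model: image -> action vector."""
    return W @ image.ravel()


def untargeted_patch_attack(image, W, top, left, size=4, steps=20, lr=0.05, seed=0):
    """Signed-gradient ascent on the squared action discrepancy.

    Objective: || toy_policy(image with patch) - toy_policy(image) ||^2,
    maximized over the patch pixels, which are kept in [0, 1].
    """
    rng = np.random.default_rng(seed)
    clean_action = toy_policy(image, W)
    patch = rng.uniform(0.0, 1.0, size=(size, size, image.shape[2]))
    history = []  # objective value before each update, plus the final value
    for _ in range(steps):
        adv = apply_patch(image, patch, top, left)
        residual = toy_policy(adv, W) - clean_action
        history.append(float(residual @ residual))
        # Closed-form gradient w.r.t. the full image, restricted to the patch region.
        grad_img = (2.0 * (W.T @ residual)).reshape(image.shape)
        grad_patch = grad_img[top:top + size, left:left + size]
        patch = np.clip(patch + lr * np.sign(grad_patch), 0.0, 1.0)
    residual = toy_policy(apply_patch(image, patch, top, left), W) - clean_action
    history.append(float(residual @ residual))
    return patch, history


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    image = rng.uniform(0.0, 1.0, size=(16, 16, 3))   # toy camera frame
    W = rng.normal(size=(7, image.size))              # 7 action dimensions (assumed)
    patch, history = untargeted_patch_attack(image, W, top=2, left=3)
    print("discrepancy before/after:", history[0], history[-1])
    print("example de-tokenized actions:", detokenize([0, 128, 255]))
```

Because the toy objective is convex in the patch pixels, each signed step cannot decrease it, which makes the sketch easy to check. A targeted variant would instead minimize the distance to a chosen target action.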